
AReaL: Ant Reasoning Reinforcement Learning for LLMs

AReaL (Ant Reasoning RL) is a fully open-source, scalable, and efficient reinforcement learning training system for large language models. It is developed at the RL Lab, Ant Research, and built upon the open-source project ReaLHF. We are fully committed to open source and release the training details, data, and infrastructure required to reproduce all of our models with the desired performance. AReaL aims to help everyone build their own AI agents easily and affordably. Our team loves milk tea because it is delicious, customizable, and affordable. We hope you all enjoy our project just as you enjoy A-ReaL-milk-tea.🧋

AReaL Highlights

- 🛠️ Open & Reproducible: We will continuously release all code, datasets, and training recipes for RL training of LLMs.

- 🚀 Scalability: AReaL can seamlessly adapt to different computational resource settings, ranging from a single node to 1K GPUs.

- 🔪 Cutting-Edge Performance: AReaL can produce models with cutting-edge reasoning capabilities. We are also actively working on other domains, such as coding and agents.

News

[2025/04/27] 🔥 We've built a documentation website using the amazing DeepWiki tool. Check the link to learn about AReaL and ask questions about it!

[2025/03/31] (v0.2, Boba) Our milestone release, Boba! Please call it A-ReaL-Boba! This release includes significantly accelerated training with SGLang support and SOTA 7B and 32B models in math reasoning.

[2025/02/24] (v0.1) Our initial release includes reproducible results for 1.5B and 7B LRMs. Check our v0.1 technical blog.

AReaL-boba Milestones and Highlights

In our boba release, we highlight the 3 most important milestones:

- Full SGLang support and a collection of efficiency improvements

- A SOTA 7B math reasoning model, AReaL-boba-RL-7B, and the corresponding training data, AReaL-boba-106k.

- A particularly competitive 32B model, AReaL-boba-SFT-32B, that can be trained at extremely low cost (training data: AReaL-boba-SFT-200).

For the complete training and model details, please check our v0.2 technical blog.

SGLang support with 1.5x speedup on 7B Training

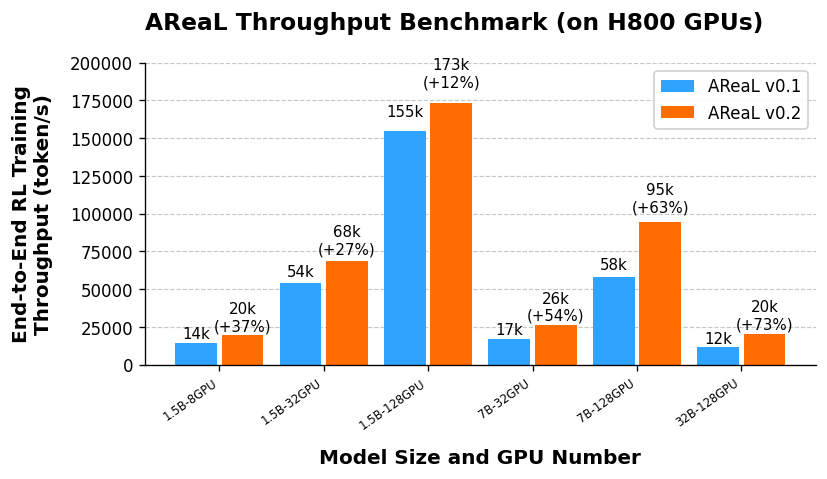

Thanks to a series of system-level optimizations, AReaL v0.2 improves its end-to-end training performance by up to 73%.

In the following table, we show the convergence time under different resource settings:

| Model Size | 1.5B | 1.5B | 1.5B | 7B | 7B | 32B (SFT) |
|---|---|---|---|---|---|---|
| #GPUs | 8 | 32 | 128 | 32 | 128 | 64 |
| Steps | 250 | 250 | 250 | 400 | 400 | 300 |
| Time (h) | ~240 | ~69 | ~27 | ~252 | ~90 | ~3.5 |
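As a rough worked example of what the table implies, the sketch below converts each setting into total GPU-hours and computes a simple scaling efficiency (observed speedup divided by the increase in GPU count). The times are the approximate (`~`) values copied from the table, so the derived figures are only rough; the helpers `gpu_hours` and `scaling_efficiency` are illustrative and not part of AReaL.

```python
# Approximate convergence times copied from the table above:
# (model size, number of GPUs, wall-clock hours to convergence).
RUNS = [
    ("1.5B", 8, 240.0),
    ("1.5B", 32, 69.0),
    ("1.5B", 128, 27.0),
    ("7B", 32, 252.0),
    ("7B", 128, 90.0),
    ("32B (SFT)", 64, 3.5),
]


def gpu_hours(num_gpus: int, hours: float) -> float:
    """Total GPU-hours consumed by one run (illustrative helper)."""
    return num_gpus * hours


def scaling_efficiency(base: tuple, scaled: tuple) -> float:
    """Observed speedup divided by the growth in GPU count.

    1.0 would mean perfectly linear scaling; values below 1.0 mean
    the speedup falls short of linear.
    """
    _, base_gpus, base_hours = base
    _, gpus, hours = scaled
    speedup = base_hours / hours
    return speedup / (gpus / base_gpus)


if __name__ == "__main__":
    for model, gpus, hours in RUNS:
        print(f"{model:>10} on {gpus:>3} GPUs: ~{gpu_hours(gpus, hours):,.0f} GPU-hours")
    print(f"1.5B, 8 -> 128 GPUs: efficiency {scaling_efficiency(RUNS[0], RUNS[2]):.2f}")
    print(f"7B, 32 -> 128 GPUs: efficiency {scaling_efficiency(RUNS[3], RUNS[4]):.2f}")
```

Under these approximations, moving the 1.5B run from 8 to 128 GPUs cuts wall-clock time by roughly 8.9x, trading extra GPU-hours for faster turnaround.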

SOTA 7B model using RL in math reasoning

| Model | AIME 2024 | AIME 2025 | GPQA-Diamond |
|---|---|---|---|
| O1-Preview | 56.7 | - | - |
| R1-Distill-Qwen-7B | 55.0 | 39.7 | 47.1 |
| Light-R1-7B-DS | 56.7 | 44.9 | 40.9 |
| AReaL-boba-RL-7B 🤗 | 61.9 | 48.3 | 47.6 |

We use R1-Distill-Qwen-7B as our base model. After RL training, the pass@1 scores on AIME 2024 and AIME 2025 improve by 6.9 and 8.6 points, respectively, achieving SOTA performance among 7B models in mathematical reasoning. We have released the training data at AReaL-boba-106k.
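The point gains quoted above are simple differences between two rows of the table. A minimal check, with scores copied from the table (the `improvements` helper is illustrative, not part of AReaL's evaluation code):

```python
# pass@1 scores (%) copied from the 7B results table above.
R1_DISTILL_QWEN_7B = {"AIME 2024": 55.0, "AIME 2025": 39.7, "GPQA-Diamond": 47.1}
AREAL_BOBA_RL_7B = {"AIME 2024": 61.9, "AIME 2025": 48.3, "GPQA-Diamond": 47.6}


def improvements(base: dict, tuned: dict) -> dict:
    """Absolute gain in points per benchmark, rounded to one decimal
    to hide floating-point noise from the subtraction."""
    return {name: round(tuned[name] - base[name], 1) for name in base}


if __name__ == "__main__":
    for bench, gain in improvements(R1_DISTILL_QWEN_7B, AREAL_BOBA_RL_7B).items():
        print(f"{bench}: +{gain} points")
```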

Although our dataset primarily consists of math and logic problems, we observed that RL training led to measurable improvements on the challenging STEM benchmark GPQA. We plan to open-source more datasets in the future, including code, STEM, and other domains. All reported numbers were re-evaluated using our evaluation code; more details are available in our blog.

Approaching QwQ-32B performances using only 200 data samples

| Model | AIME 2024 |
|---|---|
| R1-Distill-Qwen-32B | 72.6 |
| QwQ-32B | 78.9 |
| AReaL-boba-SFT-32B 🤗 | 78.8 |

Building upon R1-Distill-Qwen-32B, we replicate QwQ-32B's inference performance on AIME 2024 using just 200 data points via Supervised Fine-Tuning (SFT). We have released the training data at AReaL-boba-SFT-200.
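One way to quantify how closely SFT approaches QwQ-32B is the fraction of the gap between the base model and QwQ-32B that it recovers. A minimal sketch using the AIME 2024 scores from the table above; `gap_closed` is an illustrative metric, not one used in the original evaluation.

```python
# AIME 2024 scores (%) copied from the 32B table above.
R1_DISTILL_QWEN_32B = 72.6  # starting point before SFT
QWQ_32B = 78.9              # reference model being approached
AREAL_BOBA_SFT_32B = 78.8   # after SFT on 200 samples


def gap_closed(base: float, target: float, tuned: float) -> float:
    """Fraction of the base-to-target gap recovered by the tuned model."""
    return (tuned - base) / (target - base)


if __name__ == "__main__":
    frac = gap_closed(R1_DISTILL_QWEN_32B, QWQ_32B, AREAL_BOBA_SFT_32B)
    print(f"Gap to QwQ-32B closed: {frac:.1%}")
```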

Getting Started

Quick Start

```bash
# Train the distilled 7B model
python3 -m realhf.apps.quickstart ppo-math \
    --config examples/configs/7B-distill/ppo-7B-distill-gpus-128.yaml

# Evaluate the 7B model
python evaluation/eval_and_aggregate.py \
    --model_path ${MODEL_PATH} \
    --output_path ${OUTPUT_PATH} \
    --data_names aime24,aime25 \
    --prompt_type AReaL-boba \
    --temperature 1.0
```
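To evaluate several checkpoints with the same settings, the evaluation command above can be assembled programmatically. The sketch below is a convenience wrapper under stated assumptions: `build_eval_cmd`, `run_sweep`, and the `/path/to/checkpoints/stepN` layout are hypothetical, and the wrapper reuses only the flags shown in the command above.

```python
from __future__ import annotations

import shlex
import subprocess


def build_eval_cmd(model_path: str, output_path: str,
                   data_names: tuple[str, ...] = ("aime24", "aime25"),
                   temperature: float = 1.0) -> list[str]:
    """Return argv for evaluation/eval_and_aggregate.py using only the
    flags from the Quick Start example (hypothetical helper)."""
    return [
        "python", "evaluation/eval_and_aggregate.py",
        "--model_path", model_path,
        "--output_path", output_path,
        "--data_names", ",".join(data_names),
        "--prompt_type", "AReaL-boba",
        "--temperature", str(temperature),
    ]


def run_sweep(steps: list[int], dry_run: bool = True) -> None:
    """Evaluate one checkpoint per training step.

    The checkpoint directory layout is a placeholder; adapt it to
    wherever your trainer saves models.
    """
    for step in steps:
        cmd = build_eval_cmd(f"/path/to/checkpoints/step{step}",
                             f"outputs/step{step}")
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_sweep([100, 200])  # dry run: prints the commands without executing them
```

Building an argument list rather than a shell string avoids quoting problems when paths contain spaces.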

Resources

Future Plan

AReaL is under active development. We plan to publish major releases weekly, and we greatly appreciate contributions from the community. Here we highlight our future research and development plans.

System Development

- Support for SGLang.

- RL training with coding problems.

- Asynchronous generation and RL training.

- Optimizations for distributed training: expert parallel and zero-bubble pipelining.

- RL for vision-language models (VLM).

- Function calling and agent capabilities.

Algorithm Development

- RL training recipes for 1.5B and 7B models.

- A complete RL training recipe for 32B models.

- Sample-efficient multi-task RL algorithms.

- Agentic capabilities with end-to-end RL.

- Stable RL training for larger MoE models.

Acknowledgement

The major contributors are from the RL Lab at Ant Research and the Institute for Interdisciplinary Information Sciences, Tsinghua University.

Our team has also received invaluable assistance from the Super Computing Technology (SCT) team at Ant Group, particularly in large-scale cluster operations and maintenance.

We also appreciate all the pioneering work from the community, particularly the ReaLHF project from OpenPsi Inc. and many other projects, including, but not limited to, DeepScaleR, Open-Reasoner-Zero, OpenRLHF, verl, SGLang, QwQ, Light-R1, and DAPO.

Citation

```bibtex
@inproceedings{mei2025real,
  author       = {Mei, Zhiyu and Fu, Wei and Li, Kaiwei and Wang, Guangju and Zhang, Huanchen and Wu, Yi},
  title        = {ReaL: Efficient RLHF Training of Large Language Models with Parameter Reallocation},
  booktitle    = {Proceedings of the Eighth Conference on Machine Learning and Systems, MLSys 2025, Santa Clara, CA, USA, May 12-15, 2025},
  publisher    = {mlsys.org},
  year         = {2025},
}

@misc{areal2025,
  author       = {{RL Lab, Ant Research}},
  title        = {AReaL: Ant Reasoning RL},
  year         = {2025},
  publisher    = {GitHub},
  journal      = {GitHub repository},
  howpublished = {\url{https://github.com/inclusionAI/AReaL}},
}
```